Roni McGuinness

AI Fundamentals

Artificial Intelligence (AI)

The landscape of artificial intelligence (AI) software has undergone a significant transformation, moving from deterministic, rule-based systems to probabilistic models. This evolution is largely attributed to machine learning, which empowers software to discern patterns directly from data. By training AI models on extensive datasets, like those found on the internet, they can effectively learn and replicate these patterns.

AI models are “trained” on a large chunk of internet documents, and they learn patterns from those documents during training.

Why the explosion now:

For decades, AI could only predict and understand human speech at a rate of 4 out of 10 words.

However, with more and more data and GPUs, AI models started to understand linguistics, grammar, idioms, and context.

Predicting the next word became easier, and the understanding of human speech's idiosyncrasies and foibles improved. This allowed AI to advance rapidly, moving into machine learning with neural networks and now deep learning.

- One new large language model (LLM), trained on millions of Amazon reviews, unexpectedly came up with its own category: “Sentiment”.

Machine Learning (ML) and the Rise of Large Language Models (LLMs)

You can think about machine learning as a subset of the broader AI category.

ML started out with models trained on vast amounts of data to recognize patterns.

This process led to the development of LLMs. LLMs focus on understanding and processing human language, including grammar and idioms, and on predicting subsequent words or, put simply, trying to predict the next word.
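To make "predicting the next word" concrete, here is a minimal sketch in Python. It is not how an LLM works internally (LLMs use neural networks rather than simple word counts); it only counts which word follows which in a tiny made-up corpus and returns the most frequent follower. The function names and the corpus are illustrative assumptions.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    follow_counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        follow_counts[current_word][next_word] += 1
    return follow_counts


def predict_next_word(follow_counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = follow_counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Toy corpus, made up for illustration only.
corpus = "the cat sat on the mat and the cat slept on the sofa"
model = train_bigram_model(corpus)

print(predict_next_word(model, "the"))  # "cat" follows "the" twice, more than any other word
print(predict_next_word(model, "on"))   # "the" is the only word seen after "on"
```

The same idea, scaled up from word counts to a neural network trained on far more text, is roughly what "learning patterns from data" means for a language model.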

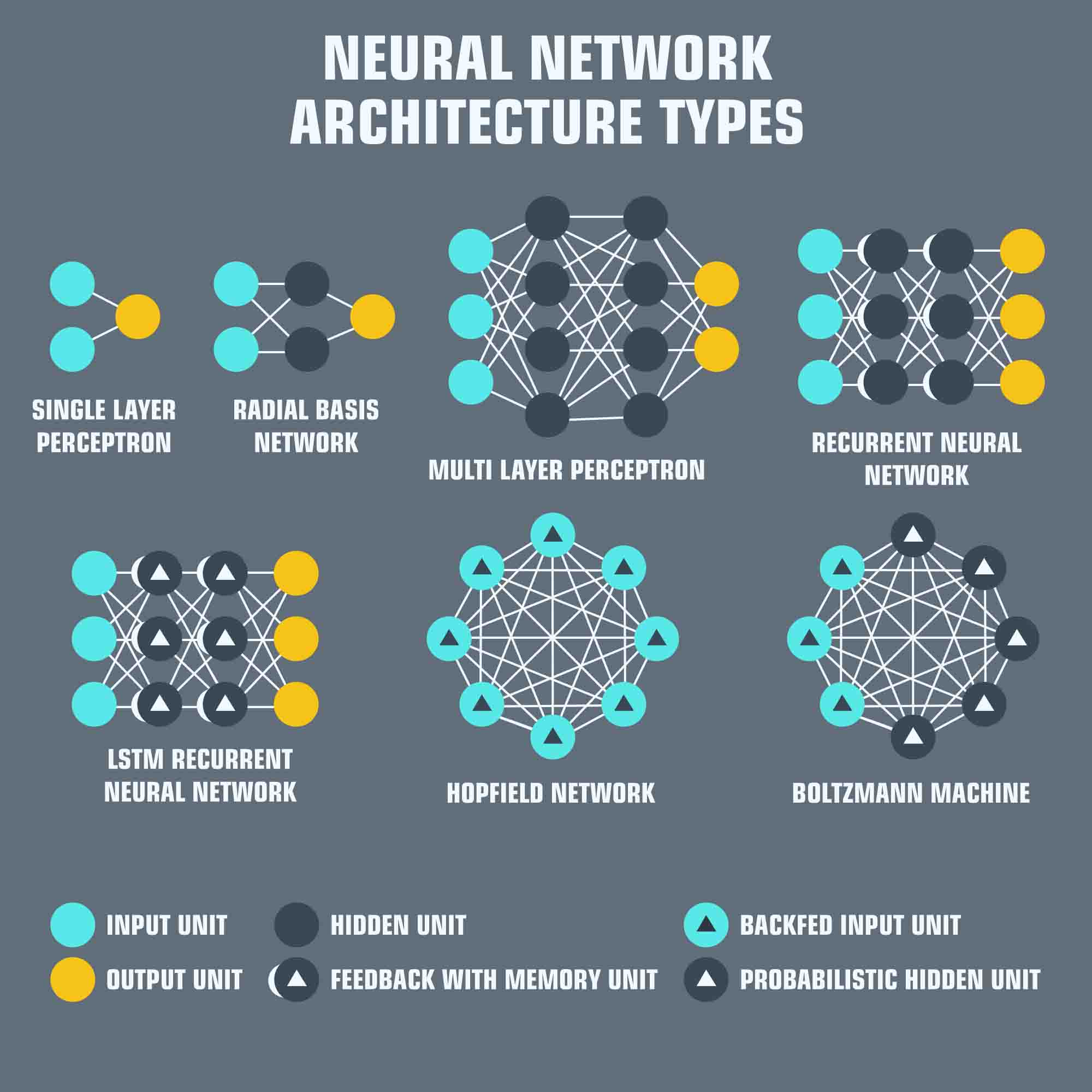

Neural Networks

AI models are built on neural networks, which are loosely "similar to the brain": think of them as a giant web of decision-making pathways that learn from examples. Neural networks can be used for many kinds of tasks, but I'll focus on language models. A network is organized into layers:

- An input layer where data enters the system. Input is converted into a numerical representation of words or tokens (more on tokens later).

- Many hidden layers that create an understanding of patterns in the system. Neurons inside each layer apply weights (also known as parameters) to the input data and pass the result through an activation function. This function outputs a value, often between 0 and 1, representing the neuron's level of activation.

- An output layer which produces the final result, such as predicting the next word in a sentence. The outputs at this stage are often referred to as logits, which are raw scores that get transformed into probabilities.
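The three layers described above can be sketched as a tiny forward pass. This is a simplified illustration, assuming one hidden layer, a sigmoid activation, and a softmax to turn logits into probabilities; all weights, biases, and input values are made-up numbers, and the function names are hypothetical.

```python
import math


def sigmoid(x):
    """Activation function that squashes any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def dense(inputs, weights, biases):
    """Weighted sum for each neuron: one row of weights (plus one bias) per neuron."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def softmax(logits):
    """Turn raw scores (logits) into probabilities that sum to 1."""
    largest = max(logits)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(z - largest) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def forward(inputs, hidden_w, hidden_b, out_w, out_b):
    """Input layer -> hidden layer (weights + activation) -> output layer (logits)."""
    hidden = [sigmoid(z) for z in dense(inputs, hidden_w, hidden_b)]
    logits = dense(hidden, out_w, out_b)
    return logits, softmax(logits)


# Hypothetical values: a 2-number input, 3 hidden neurons, 2 possible output words.
inputs = [0.5, -1.0]
hidden_w = [[0.1, 0.4], [-0.3, 0.2], [0.5, -0.6]]
hidden_b = [0.0, 0.1, -0.1]
out_w = [[0.3, -0.2, 0.5], [-0.4, 0.1, 0.2]]
out_b = [0.0, 0.0]

logits, probs = forward(inputs, hidden_w, hidden_b, out_w, out_b)
print("logits:", logits)
print("probabilities:", probs)
```

A real language model has many more layers and far more weights per layer, but the flow of data from input to logits to probabilities follows the same outline.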

Neural Network Training:

- Training: The process of adjusting the weights in a neural network to minimize the error between predictions and true labels.

- Loss Function: A mathematical function that measures the difference between predicted outputs and true labels.

- Optimizer: An algorithm used to update the weights during training, such as stochastic gradient descent (SGD).

- Artificial Neural Network (ANN): A type of neural network that mimics the human brain's neural structure and function to solve complex problems.

- Convolutional Neural Network (CNN): A type of ANN designed specifically for image and signal processing tasks, using convolutional layers to extract features from data.

- Recurrent Neural Network (RNN): A type of ANN that processes sequential data, such as time series or natural language, by maintaining internal state.

- Generative Adversarial Networks (GANs): A type of deep learning model that uses two neural networks to generate new data samples that resemble existing data.

- Transfer Learning: The process of reusing pre-trained models and their weights on new tasks or datasets, reducing the need for extensive training.

- Backpropagation: An algorithm used to train neural networks by propagating errors backwards through the network to adjust weights and improve performance.

- Neural Network: A computational model inspired by the structure and function of the human brain, composed of interconnected nodes (neurons) that process and transmit information.
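The training terms above (loss function, optimizer, weight updates) can be seen together in a very small example. This sketch assumes a single "neuron" with one weight and one bias, a mean squared error loss, and plain full-batch gradient descent rather than SGD; the data, learning rate, and function names are made up for illustration. The gradients are written out by hand, which is what backpropagation automates for larger networks.

```python
def predict(w, b, x):
    """A single neuron with no activation: weight times input plus bias."""
    return w * x + b


def mse_loss(w, b, data):
    """Loss function: mean squared difference between predictions and true labels."""
    return sum((predict(w, b, x) - y) ** 2 for x, y in data) / len(data)


def gradients(w, b, data):
    """Derivatives of the loss with respect to w and b (chain rule, done by hand)."""
    n = len(data)
    grad_w = sum(2 * (predict(w, b, x) - y) * x for x, y in data) / n
    grad_b = sum(2 * (predict(w, b, x) - y) for x, y in data) / n
    return grad_w, grad_b


def train(data, learning_rate=0.05, epochs=2000):
    """Optimizer loop: repeatedly nudge the weights against the gradient."""
    w, b = 0.0, 0.0
    for _ in range(epochs):
        grad_w, grad_b = gradients(w, b, data)
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Made-up training data that follows y = 2x + 1.
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

print("loss before training:", mse_loss(0.0, 0.0, data))
w, b = train(data)
print("learned w and b:", w, b)
print("loss after training:", mse_loss(w, b, data))
```

After training, the learned weight and bias should be close to 2 and 1, the values used to generate the data, and the loss should be close to zero.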

Deep Learning:

Neural networks are the foundation upon which deep learning is built.

Within machine learning, deep learning is a specialized subfield that uses artificial neural networks with multiple hidden layers. These networks are inspired by the human brain's structure, processing information hierarchically. Deep learning models excel at learning complex relationships in data.

The core principle of deep learning is to train models on extensive datasets, enabling them to recognize intricate patterns and make accurate predictions. The network's layers progressively extract and combine features from the input data, forming higher-level representations that help the model discern complex relationships and generalize to unseen data.

Key Features of Deep Learning

Deep learning is characterized by several key features:

- Multilayer Architecture: Networks consist of multiple hidden layers for hierarchical feature extraction.

- Representation Learning: Models transform raw data into increasingly abstract representations.

- Non-Linearity: Hidden layers introduce non-linearity, enabling the modeling of complex functions.

- Backpropagation: Models are trained using backpropagation to optimize performance.

- Generalization: Models aim to generalize to unseen data by capturing underlying patterns.

AI Glossary

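- Token: A unit of text that a model processes, such as one or more letters or a whole word.

- AI Inference: The "model as-is" approach, where a trained model is used to produce outputs without changing its weights.

- Fine Tuning: The "model adaptation" approach, where a pre-trained model is trained further on new data for a specific task.

- Deep learning models: Neural networks with multiple hidden layers.

- Transformers: A neural network architecture that processes the tokens of a sequence in parallel.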

- Fixed chunk size: A fixed number of chunks is used for all token types.

- Dynamic chunk size: The number of chunks varies depending on the token type or its position in the sequence.

- Chunk length adjustment: The length of each chunk is adjusted during training to optimize performance.

- Artificial Intelligence (AI): The development of computer systems that can perform tasks that typically require human intelligence.

- Deep Learning: A subset of machine learning that involves the use of multiple layers of artificial neural networks to analyze and interpret data.
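As a rough illustration of the "Token" entry above, the sketch below splits text into word-level tokens and maps each one to a number. Real tokenizers usually work on sub-word pieces and are considerably more involved; the whitespace splitting, the `unknown_id` value, and the function names here are all assumptions made for this example. The `chunk_fixed` helper shows one possible reading of chunking, namely grouping a token sequence into pieces of a fixed length, and may not match the exact definitions in the glossary.

```python
def build_vocab(text):
    """Assign each distinct word an integer ID in order of first appearance."""
    vocab = {}
    for word in text.lower().split():
        if word not in vocab:
            vocab[word] = len(vocab)
    return vocab


def encode(text, vocab, unknown_id=-1):
    """Convert text to a list of token IDs; unseen words get `unknown_id`."""
    return [vocab.get(word, unknown_id) for word in text.lower().split()]


def chunk_fixed(token_ids, chunk_size):
    """Group token IDs into consecutive pieces of at most `chunk_size` tokens."""
    return [token_ids[i:i + chunk_size]
            for i in range(0, len(token_ids), chunk_size)]


# Made-up example sentence.
text = "the cat sat on the mat"
vocab = build_vocab(text)
token_ids = encode(text, vocab)

print(vocab)                      # {'the': 0, 'cat': 1, 'sat': 2, 'on': 3, 'mat': 4}
print(token_ids)                  # [0, 1, 2, 3, 0, 4]
print(chunk_fixed(token_ids, 4))  # [[0, 1, 2, 3], [0, 4]]
```

These integer IDs are the kind of numerical representation that a network's input layer receives.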

Fundamentals of Deep Learning

Deep learning is a subfield of machine learning that uses neural networks with multiple layers to learn complex patterns in data.

Key Concepts:

- Artificial Neural Networks (ANNs):

  - ANNs are composed of interconnected nodes (neurons) that process inputs and produce outputs.

  - Each node applies an activation function to the weighted sum of its input values.

- Deep Learning Architectures:

  - Convolutional Neural Networks (CNNs): designed for image and video processing.

  - Recurrent Neural Networks (RNNs): used for sequential data, such as text or audio.

  - Long Short-Term Memory (LSTM) networks: a type of RNN designed to mitigate the vanishing gradient problem.

- Activation Functions:

  - Sigmoid: outputs values between 0 and 1, often used in classification problems.

  - ReLU (Rectified Linear Unit): outputs 0 for negative inputs and the input value otherwise, helping to reduce vanishing gradients.

  - Tanh: similar to sigmoid but returns output values between -1 and 1.

- Backpropagation:

  - The algorithm that computes the error gradients in a neural network during training.

  - Backpropagation is essential for optimizing the parameters of neural networks.

- Gradient Descent:

  - An optimization algorithm used to update weights in neural networks during training.

  - Gradient descent minimizes the loss function by adjusting the weights in the direction opposite to their gradient.

- Overfitting and Regularization:

  - Overfitting occurs when a model is too complex and performs well on training data but poorly on new data.

  - Regularization techniques help prevent overfitting. L1/L2 regularization adds a penalty term to the loss function, while dropout randomly disables neurons during training.
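The two regularization techniques named above work in different ways, which a short sketch can make clearer. It assumes an L2 penalty defined as a strength factor times the sum of squared weights, and the common "inverted dropout" variant that rescales the surviving activations; the numbers, the `strength` and `drop_prob` parameters, and the function names are hypothetical.

```python
import random


def l2_penalty(weights, strength):
    """L2 regularization term: large weights make the penalty larger."""
    return strength * sum(w * w for w in weights)


def regularized_loss(data_loss, weights, strength=0.01):
    """Total loss = loss on the data + penalty on the size of the weights."""
    return data_loss + l2_penalty(weights, strength)


def dropout(activations, drop_prob, rng):
    """Randomly zero out activations during training (inverted dropout).

    Surviving values are divided by the keep probability so that the
    expected total activation stays the same.
    """
    keep_prob = 1.0 - drop_prob
    return [a / keep_prob if rng.random() < keep_prob else 0.0
            for a in activations]


# Made-up weights and a made-up data loss of 0.25.
weights = [0.5, -1.5, 2.0]
print(regularized_loss(0.25, weights))

# A seeded generator keeps the example repeatable.
rng = random.Random(0)
print(dropout([1.0, 2.0, 3.0, 4.0], drop_prob=0.5, rng=rng))
```

Note that the L2 penalty changes the loss value itself, whereas dropout changes the activations flowing through the network and is normally switched off at inference time.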

Deep Learning Applications:

- Computer Vision:

  - Image classification

  - Object detection

  - Image segmentation

- Natural Language Processing (NLP):

  - Text classification

  - Sentiment analysis

  - Machine translation

- Speech Recognition: Automatic speech recognition systems

Key Deep Learning Techniques:

- Transfer Learning: Using pre-trained models as a starting point for new tasks.

- Batch Normalization: Normalizing the inputs to layers in a neural network.

- Attention Mechanisms: Enabling models to focus on specific parts of the input data.

By understanding these fundamentals, you'll be well-prepared to tackle common deep learning challenges and build your skills in this exciting field!